Google BERT Update | A Big Change in How Google Understands Search

Google rolled out the BERT (Bidirectional Encoder Representations from Transformers) update at the end of October 2019. Yes! Google can now better understand what you are searching for.

Google BERT is a neural network-based natural language processing technique that helps machines understand language, and it is part of artificial intelligence and machine learning. Its main use is to better understand what the user means. Google introduced it last year and has now applied it to improve how search works.

All of us come to the Google search bar to find an answer to a query. But what would you do if it returned results that did not match what you asked? You would be frustrated!

Mr. Pandu Nayak (Google Fellow and Vice President) says that Google sees billions of searches every day and that 15% of them are unique, meaning the query has never appeared in its search database before. Searches of this type often contain long-tail keywords that Google could not understand well, but since the release of BERT, Google can serve better results for those queries.

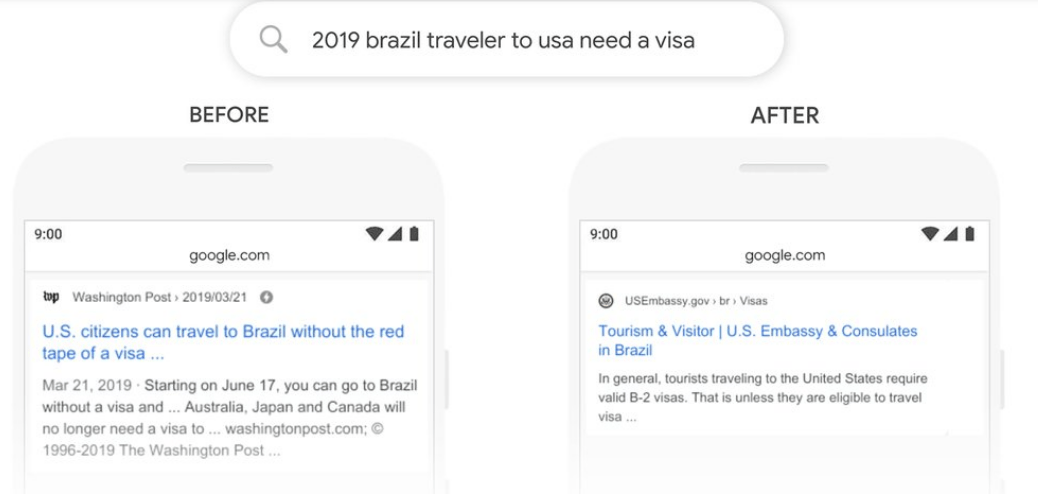

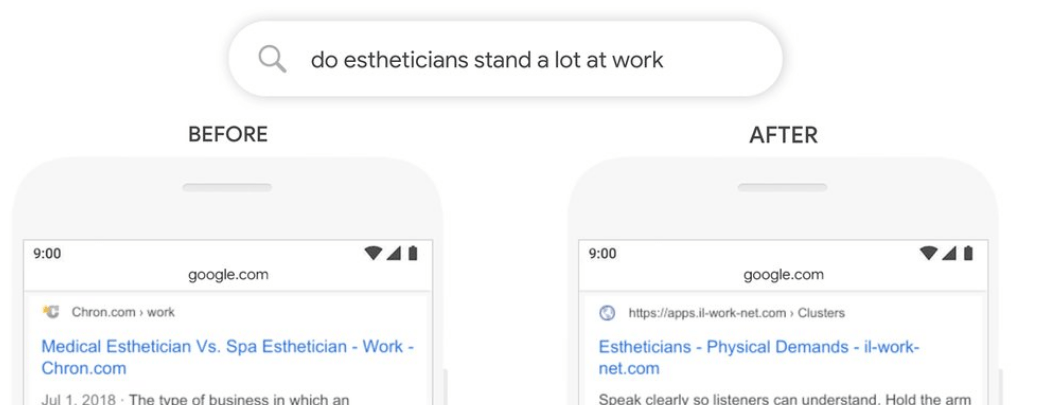

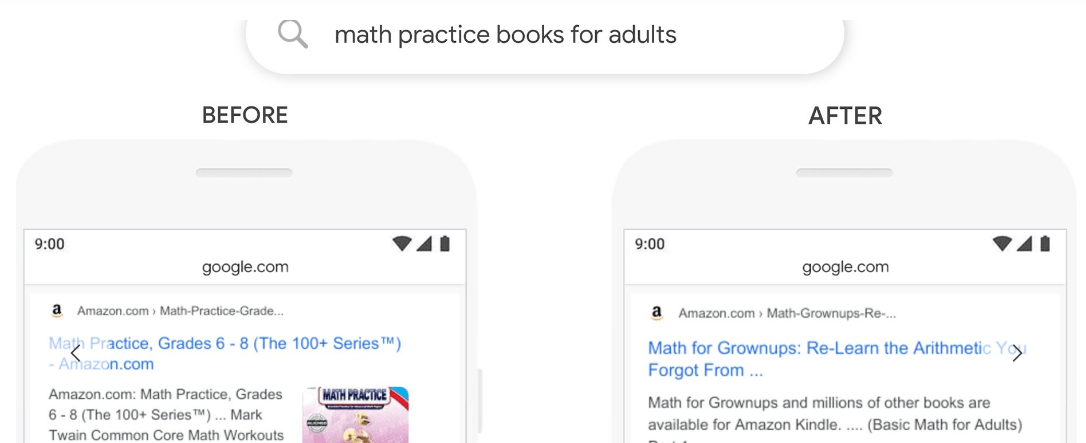

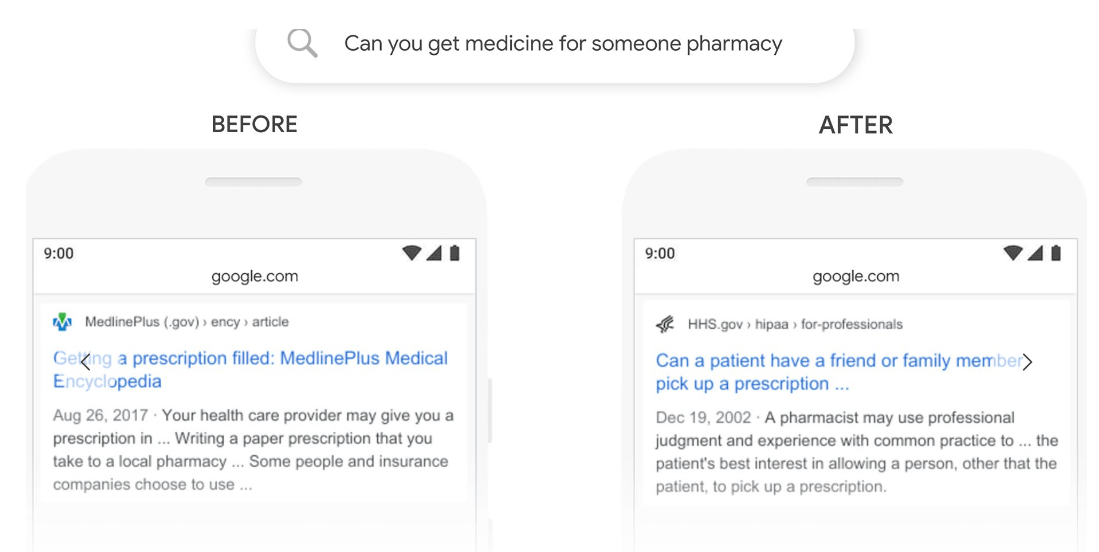

Here you can see some of the examples of search changes before and after the release of BERT.

Example 1

Example 2

Example 3

Example 4

Previously, Google could not properly understand long-tail queries in which words such as “for”, “to”, “what”, and “in” carry the context.
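To see why these small words matter, here is a minimal Python sketch. It is only an illustration and not how Google Search or BERT works: the two helper functions and the sample queries are made up for this post. It shows that a matcher which ignores word order, or which throws away connecting words, cannot tell apart queries that mean different things.

```python
from collections import Counter
from typing import List

# Hypothetical list of connecting words, taken from the ones named above.
FUNCTION_WORDS = {"for", "to", "what", "in"}


def bag_of_words(query: str) -> Counter:
    """Count the words in a query while ignoring their order."""
    return Counter(query.lower().split())


def drop_function_words(query: str) -> List[str]:
    """Remove the connecting words, as a naive keyword matcher might."""
    return [word for word in query.lower().split() if word not in FUNCTION_WORDS]


# Made-up sample queries: same words, opposite direction of travel.
query_a = "flights from delhi to london"
query_b = "flights from london to delhi"

# Both queries look identical once word order is ignored.
same_bag = bag_of_words(query_a) == bag_of_words(query_b)

# Dropping "for" makes two different requests look the same.
same_keywords = drop_function_words("book for a teacher") == drop_function_words("book a teacher")

print(same_bag, same_keywords)
```

Both comparisons come out equal even though the queries ask for different things, which is the kind of context a model that reads the whole sentence can keep.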

It’s difficult to understand the user’s intent every time!

Yes! Google is a search engine, not a god, so it cannot give you better results every time. Google says that the BERT update will help it serve better results, but not always. There are still many queries for which it does not return a good response.

What can you do?

Google has already stated that this is not a penalty update; it is meant only to improve search. Google has also given advice on web page ranking. The following techniques may help you take advantage of Google BERT.

- Use proper schema markup

- Include long-tail keywords

- Try to solve the user queries with examples

- Use “How to”, “Which”, and “Why” type phrasing
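As one way to act on the schema markup tip, here is a minimal Python sketch that builds FAQPage structured data in JSON-LD using the schema.org vocabulary. The function names and the sample question and answer are hypothetical and exist only for illustration, so replace them with real content from your page. Treat this as a starting point rather than a guaranteed ranking fix.

```python
import json


def faq_json_ld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def to_script_tag(data):
    """Wrap the structured data in the script tag that goes into the page HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Made-up sample content, phrased as a natural "how to" question.
sample_pairs = [
    (
        "How to write content for long-tail queries?",
        "Answer the full question in plain language and include a worked example.",
    ),
]

markup = faq_json_ld(sample_pairs)
script_tag = to_script_tag(markup)
print(script_tag)
```

Paste the printed script tag into the page that contains the same questions and answers, then check it with a structured data testing tool before publishing.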

Conclusion

Understanding user language is an ongoing challenge, and Google keeps updating its algorithms and ranking factors to serve better results. We hope Google BERT will improve natural language understanding and give us more accurate information!